Deepfakes

2023 NOV 10

REFERENCE NEWS:

- Recently, the Central Government has issued comprehensive guidelines to social media companies to address the identification and removal of misleading and deepfake videos. The spread of deepfakes on social media, especially after a viral video featuring an actress, led to decisive government action.

GUIDELINES TO SOCIAL MEDIA COMPANIES:

The guidelines issued to social media companies include the following key points:

- Vigilance and Identification: Companies are urged to exercise appropriate caution and implement measures to identify false information and deepfake content, particularly content that violates established rules, regulations, and user agreements.

- Timely Action: Swift action should be taken within the specified time frame as prescribed by the IT Rules of 2021.

- User Responsibility: Users are explicitly instructed not to host or promote any misleading or deepfake content.

- Reporting Mechanism: Any such content reported by users should be promptly removed within 36 hours.

- Compliance and Accountability: Social media intermediaries are reminded that non-compliance with IT Act provisions may lead to penalties under the IT Rules 2021 and loss of the protection available to intermediaries under Section 79(1).

WHAT IS A DEEPFAKE?

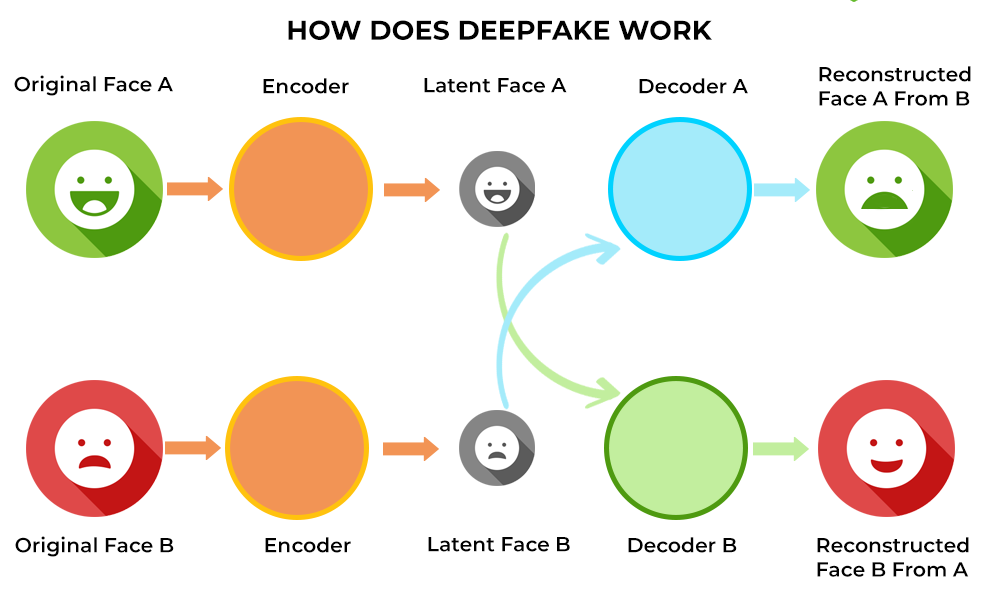

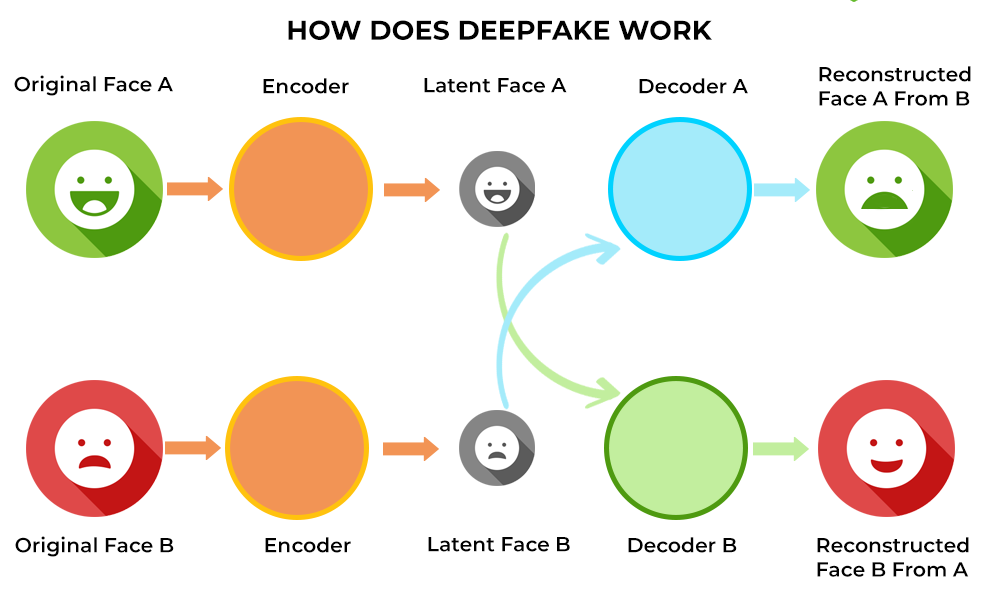

- Deepfakes are digital media (video, audio, and images) edited and manipulated using Artificial Intelligence.

- To be precise, deepfakes are synthetic media generated using the Artificial Intelligence technique of deep learning. The name combines "deep" (from deep learning) and "fake" (synthetic or falsified media).

- The term deepfake originated in 2017, when an anonymous Reddit user called himself “Deepfakes.” This user manipulated Google’s open-source, deep-learning technology to create and post pornographic videos.

- Artificial Intelligence (AI) refers to the ability of machines to perform cognitive tasks like thinking, perceiving, learning, problem solving and decision making.

- Deep learning is a machine learning technique that teaches computers to do what comes naturally to humans: learn by example.

POSITIVE APPLICATIONS OF AI-GENERATED SYNTHETIC MEDIA (OR DEEPFAKES):

- Education:

- Deepfake technology facilitates numerous possibilities in the education domain. It can help an educator deliver innovative lessons that are far more engaging than traditional visual and media formats.

- For example, AI-Generated synthetic media can bring historical figures back to life for a more engaging and interactive classroom.

- Accessibility:

- AI-generated synthetic media can help make accessibility tools smarter and, in some cases, more affordable and easier to personalize.

- For example, Microsoft’s Seeing AI and Google’s Lookout use AI-based recognition and a synthetic voice to describe objects, people, and surroundings to the user.

- Art:

- Cultural and entertainment businesses can use deepfakes for artistic purposes.

- For example, in the video gaming industry, AI-generated graphics and imagery can accelerate the speed of game creation.

- Autonomy and expression:

- AI-generated synthetic media can help human rights activists and journalists to remain anonymous in dictatorial and oppressive regimes.

- Deepfakes can be used to anonymize voices and faces to protect their privacy.

- Forensic science:

- AI-generated synthetic media can help in reconstructing the crime scene using inductive and deductive reasoning and evidence.

CONCERNS ASSOCIATED WITH DEEPFAKE TECHNOLOGY:

- Deepfakes targeting women:

- According to a study conducted by Deep Trace Labs, 96% of all deepfake videos online are pornographic, and 99% of deepfake pornography targets women.

- Deepfake porn can lead to emotional distress, reputational damage, abuse, and material consequences like financial loss and job loss for women, reducing them to sexual objects.

- Deepfakes to carry out frauds:

- Malicious actors can exploit individuals' lack of awareness to defraud them using audio and video deepfakes, extracting money, confidential information, or favours.

- For instance, a deepfake voice scam fooled a UK energy firm's CEO into transferring €220,000 to a fake Hungarian supplier.

- Potential to subvert the government:

- The potential of deepfake technology for disinformation has grave implications for national security and even the stability of the government.

- For example, in 2019, a video of Gabon's President, Ali Bongo, that was widely suspected of being a deepfake raised doubts about his fitness to rule and was followed by a coup attempt by the country's military.

- Impact on mainstream media:

- Recent examples show that even mainstream media outlets occasionally become victims of disinformation campaigns using deepfakes, further eroding public confidence in their credibility.

- For example, a fake image of an explosion at the US Pentagon spread across social media, and was mistakenly aired by Indian TV news channels.

- Hybrid warfare:

- Adversarial states have leveraged deepfake technology as a major component of their ‘hybrid warfare’ or ‘grey zone’ tactics. The European Union (EU) classifies such activity as ‘foreign information manipulation and interference’ (FIMI).

- For example, during the Russia-Ukraine conflict, a deepfake video of Ukrainian President Volodymyr Zelenskyy asking his troops to surrender went viral.

- Also, a misleading video circulated online during the 2020 Galwan clash between India and China, falsely portraying a violent encounter between their soldiers.

- Liar’s dividend:

- Another concern associated with deepfakes is the liar’s dividend: an inconvenient truth can be dismissed as a deepfake or as fake news.

- Leaders may weaponise the existence of deepfakes, using claims of fake news and alternative-facts narratives to discredit genuine media and the truth. For instance, during his presidency, Donald Trump and his administration frequently dismissed unflattering news reports or media coverage as "fake news."

- Disrupts elections and undermines democracy:

- Deepfakes can aid in altering the democratic discourse, undermine trust in institutions, and impair diplomacy. False information about institutions, public policy, and politicians powered by a deepfake can be exploited to spin the story and manipulate belief.

- For instance, a deepfake can damage a political candidate's image and disrupt elections by spreading false depictions of unethical behavior.

- Allow authoritarian regimes to flourish:

- For authoritarian regimes, it is a tool that can be used to justify oppression and disenfranchise citizens.

- For example, China has utilized AI-generated news anchors through deepfake technology to advance Chinese Communist Party (CCP) interests and spread anti-US propaganda.

- Reputation and Privacy:

- Individuals' reputations can be tarnished by malicious deepfake content, and their privacy can be violated.

- Can be used by non-state actors:

- Non-state actors can use deepfakes to manipulate public sentiment by creating fabricated videos or audio recordings of their adversaries for the purpose of stirring anti-state sentiments.

- For instance, a terrorist organization can easily create a deepfake video showing soldiers dishonoring a religious place to inflame existing anti-state emotions and cause further discord.

STATUS OF DEEPFAKES REGULATION IN INDIA:

So far, India has not enacted any specific legislation to deal with deepfakes, though there are some provisions in the IT Act 2000 and the Indian Penal Code that can be invoked to counter deepfakes.

- Provisions under the IT Act 2000:

- Section 66D: Punishes impersonation using a computer resource with up to three years in jail and a fine up to Rs 1 lakh.

- Section 66E: Deals with unauthorized image capture and dissemination, carrying a penalty of up to three years in jail or a fine of up to Rs 2 lakh.

- Sections 67 and 67A: Punish the electronic publication of sexually explicit material.

- Provisions under the IPC that can be applied to deepfakes include Section 500 (defamation); Sections 463, 468, and 469 (forgery and its use to harm reputation); Section 506 (criminal intimidation); and Section 354C (voyeurism).

However, these provisions may not fully cover all the complex issues related to deepfakes.

WAY FORWARD:

- Media literacy:

- Media literacy for consumers and journalists is the most effective tool to combat disinformation and deepfakes.

- Media literacy efforts must be enhanced to cultivate a discerning public. As consumers of media, the public must have the ability to decipher, understand, translate, and use the information they encounter.

- Regulations to curb malicious deepfakes:

- Meaningful regulations, developed through collaborative discussion among the technology industry, civil society, and policymakers, can help disincentivize the creation and distribution of malicious deepfakes.

- Technology:

- There should be easy-to-use and accessible technology solutions to detect deepfakes, authenticate media, and amplify authoritative sources.

- As deepfake generation techniques improve, AI-based automated detection tools must be developed to keep pace with them.

- Also, blockchains are robust against many security threats and can be used to digitally sign and affirm the validity of a video or document.
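The idea of registering media so that its validity can later be affirmed can be illustrated with a minimal sketch. It assumes a simplified, in-memory hash chain standing in for a real blockchain, and it uses plain SHA-256 fingerprints rather than true digital signatures (which would need a public-key library). The names `MediaLedger`, `register`, `is_registered`, and `chain_is_intact` are hypothetical and exist only for this illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def fingerprint(media_bytes: bytes) -> str:
    """Return the SHA-256 fingerprint of a media file's raw bytes."""
    return hashlib.sha256(media_bytes).hexdigest()


def _hash_record(publisher: str, media_hash: str, prev_hash: str) -> str:
    """Hash the fields of one ledger entry in a stable (sorted-key) form."""
    body = {"publisher": publisher, "media_hash": media_hash, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class MediaLedger:
    """Toy append-only ledger: each entry commits to the one before it."""

    def __init__(self):
        self.entries = []

    def register(self, media_bytes: bytes, publisher: str) -> dict:
        """Record the fingerprint of an original piece of media."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS_HASH
        media_hash = fingerprint(media_bytes)
        entry = {
            "publisher": publisher,
            "media_hash": media_hash,
            "prev_hash": prev_hash,
            "entry_hash": _hash_record(publisher, media_hash, prev_hash),
        }
        self.entries.append(entry)
        return entry

    def is_registered(self, media_bytes: bytes) -> bool:
        """True only if these exact bytes were registered earlier."""
        media_hash = fingerprint(media_bytes)
        return any(e["media_hash"] == media_hash for e in self.entries)

    def chain_is_intact(self) -> bool:
        """Recompute every entry hash to detect edits to past records."""
        prev_hash = GENESIS_HASH
        for e in self.entries:
            expected = _hash_record(e["publisher"], e["media_hash"], e["prev_hash"])
            if e["prev_hash"] != prev_hash or e["entry_hash"] != expected:
                return False
            prev_hash = e["entry_hash"]
        return True


ledger = MediaLedger()
original = b"original video bytes"
ledger.register(original, "Newsroom A")
print(ledger.is_registered(original))                # the untouched file matches
print(ledger.is_registered(original + b"tampered"))  # any alteration changes the hash
```

A byte-level fingerprint only confirms that a file is identical to the registered original. A re-encoded or cropped copy of a genuine video would also fail the check, so a scheme like this can complement detection tools but cannot replace them.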

STEPS TAKEN BY VARIOUS COUNTRIES TO COMBAT DEEPFAKES:

- The European Union:

- The European Union has an updated Code of Practice to stop the spread of disinformation through deepfakes.

- The revised Code requires tech companies including Google, Meta, and Twitter to take measures to counter deepfakes and fake accounts on their platforms.

- Introduced in 2018, the Code of Practice on Disinformation brought industry players together for the first time worldwide to commit to countering disinformation.

- The United States:

- In July 2021, the bipartisan Deepfake Task Force Act was introduced in the U.S. to assist the Department of Homeland Security (DHS) in countering deepfake technology.

- Some States in the United States, such as California and Texas, have passed laws that criminalise publishing and distributing deepfake videos intended to influence the outcome of an election.

CONCLUSION:

- Deepfake technology has positive uses but also carries serious risks of misuse. To combat the threats associated with deepfakes, better regulation and technological interventions are needed, and the public must also take responsibility for being critical consumers of media on the Internet, pausing to think before sharing content on social media.

PRACTICE QUESTION:

Q. Discuss the potential risks associated with deepfakes and suggest measures to overcome them. (10 marks, 150 words)